Simulated Self-Driving Car

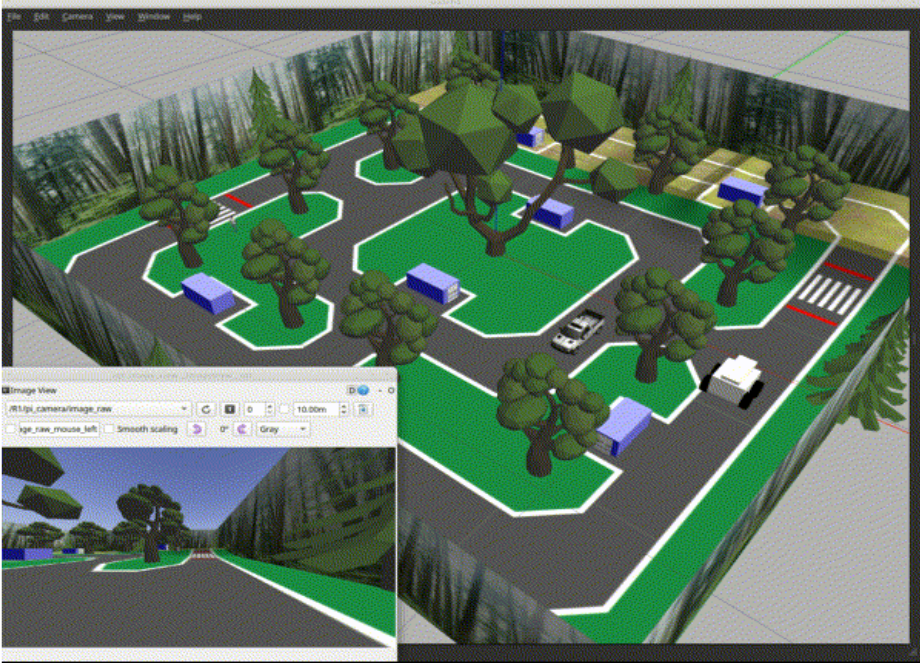

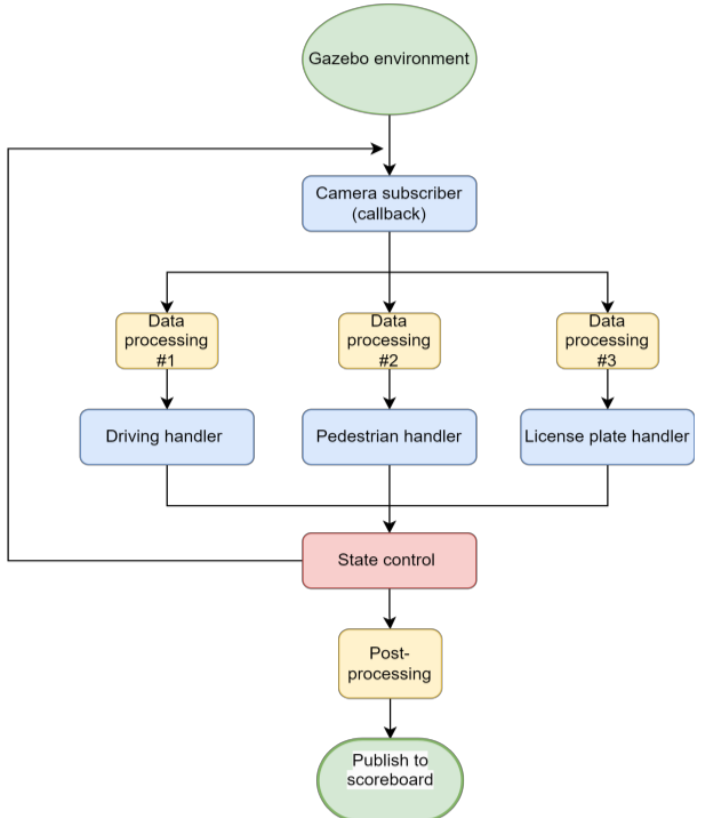

Project Goal: Program a self-driving robot in a Gazebo simulation to detect vehicles and pedestrians, obey traffic rules, and read license plates.

Outcome: Placed 1st out of 16 teams with the maximum possible score. The car is controlled by multiple convolutional neural networks (CNNs) that decide the driving direction and perform optical character recognition on license plates.

Teammate: Chris Yoon | LinkedIn

Links: Poster PDF, Video Demo, Design Report, GitHub Repo, Course Info

Key Features:

Self-driving

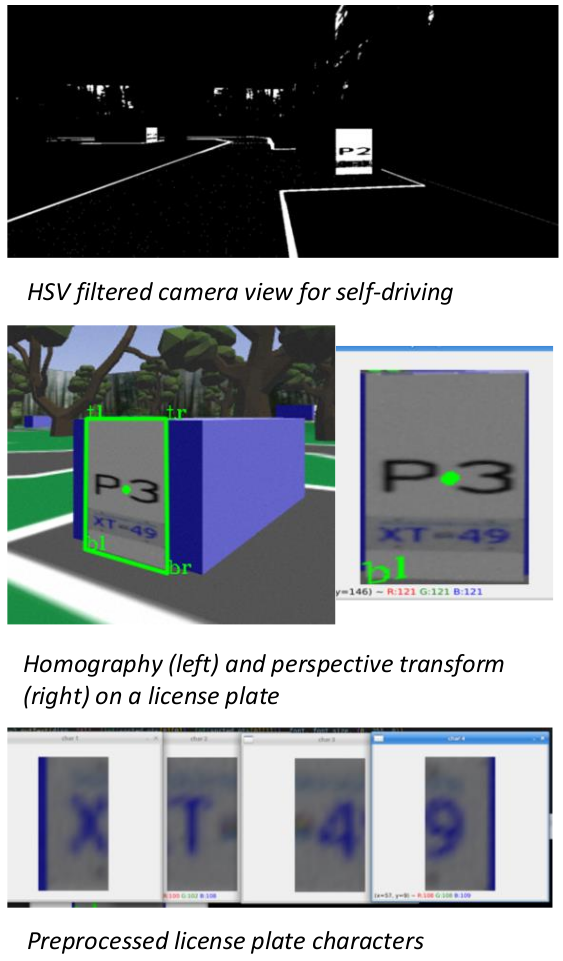

- Preprocessed camera images with OpenCV, using HSV filtering to strip out information the network does not need as input

- Built and trained a convolutional neural network (CNN) using TensorFlow, outputting linear and angular velocities

License plate reading

- Combined homography and perspective transforms so that the individual characters are always presented to the networks from a consistent view

- Built and trained separate CNNs to recognize the parking ID, letters, and numbers

Data collection

- Collected, cleaned, and labeled 20,000+ input images through a systematic data collection process, which led to high prediction accuracy for the neural networks

Pedestrian detection

- Integrated several computer vision techniques to detect crosswalks and determine the pedestrian’s position

Images: